Re-think the measurement of learning results (and the Kirkpatrick model) by seriously measuring performance

It’s a given that learning must have concrete and measurable business results. It’s also a given that learning professionals are required to show the hard numbers proving learning has a positive return on investment. Yet this is where things start to get tricky.

Measuring the business results of learning is often discussed, but doing it in practice takes additional work. This is where the Kirkpatrick model is often invoked. The model is a valuable tool because it makes clear that workplace learning should produce concrete behavior and performance results. These are the four levels of the model:

- Level 1: reaction – are learners reacting positively to the event?

- Level 2: learning – did learners acquire the required learning (and retain it)?

- Level 3: behavior – did learners apply what they learned on-the-job?

- Level 4: outcomes – did learning result in the targeted outcome (i.e. change behavior and impact the business)?

The “learning” and “performance” levels

The two lower “learning” levels of the model – ensuring that learning is well-received by learners and that it results in positive knowledge retention – are already measured by L&D professionals and fit naturally in the flow of training employees.

Yet the next two “performance” levels of the model, which check whether learning led to a change in behavior and results, are usually not within easy reach of the learning practitioner.

In this article, we will discuss how the two lower levels of the model (the “learning measurement levels”) are addressed by modern learning systems and how the two higher levels (the “performance measurement levels”) can be measured as well, without bringing in consultants or setting up laborious observations and measurements.

Measuring the “learning” levels

As eLearning systems evolve, they increasingly contain built-in assessment and feedback, which almost removes the need to assess learners or ask for their reactions in separate systems. Both can be done directly in the learning system.

For instance, “covert” assessment is carried out throughout training, and there are many opportunities to ask for learner feedback. Think of a gamified eLearning system: some of the quizzes or simulations teach, but they can also be used for assessment or to gauge learner reactions. Repetitive learning and spaced repetition don’t just help learners retrieve and retain knowledge; they also measure retention over time. Similarly, additional questions, such as how likely the learner is to apply the knowledge at work, can be asked and the answers analyzed.

This is a great improvement over one-time assessment events, which provide a snapshot of knowledge at a given point but give little visibility into knowledge retention over time. Many measurements over time give L&D professionals a real understanding of whether the forgetting curve is at play and which types of knowledge are and aren’t retained, which is very valuable when applying remedial learning.
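As a rough illustration of how repeated measurements expose the forgetting curve, the sketch below groups quiz answers by topic and by days since training, then flags topics whose latest retention rate has dropped. The record layout, the topic names and the 60% threshold are assumptions made for this example, not features of any particular learning system.

```python
from collections import defaultdict

# Hypothetical quiz records: (topic, days_since_training, answered_correctly)
attempts = [
    ("pricing", 1, True), ("pricing", 1, True),
    ("pricing", 14, True), ("pricing", 14, False),
    ("pricing", 30, False), ("pricing", 30, False),
    ("safety", 1, True), ("safety", 14, True), ("safety", 30, True),
]

def retention_by_day(records):
    """Return {topic: {day: share of correct answers on that day}}."""
    totals = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [correct, asked]
    for topic, day, correct in records:
        cell = totals[topic][day]
        cell[0] += int(correct)
        cell[1] += 1
    return {
        topic: {day: c / n for day, (c, n) in sorted(days.items())}
        for topic, days in totals.items()
    }

def topics_needing_refresh(retention, threshold=0.6):
    """Topics whose most recent measurement fell below the assumed threshold."""
    return sorted(
        topic for topic, days in retention.items()
        if days[max(days)] < threshold
    )

retention = retention_by_day(attempts)
print(retention["pricing"])
print(topics_needing_refresh(retention))
```

In this invented data set, retention for "pricing" decays with each measurement while "safety" holds steady, so only "pricing" would be a candidate for remedial learning.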

Measuring the “performance” levels

The next two levels, the impact of learning on behavior and on business results, are non-trivial to measure, to say the least. This gap in the ability to communicate the true business impact of learning isn’t just cosmetic or a disadvantage in office politics. It also hampers the ability to apply corrective learning, truthfully evaluate learning outcomes and plan for better ones.

Yet learning professionals are often not in a position to measure performance easily, or even to have basic visibility into these metrics. A simple case is training on a new product and checking whether that product is actually sold, but there are more complex cases such as safety training, new processes and software. As a result, measurement is done rarely and usually at considerable expense: the cost of measuring and making observations, and the cost of guessing or relying on partial (and often biased) responses from employees.

The main ways of measuring the impact of learning on performance are as follows:

- Self-reporting (e.g. ticking a box): can be biased or incorrect, or be based on a mistaken understanding of the required behavior (think overconfidence)

- External behavioral observations: require having people go around and make observations, may be inconclusive, are labor-intensive and can make employees behave differently when observed

- General KPIs: for instance, checking new product sales across the entire team. This “averages out” results and may prevent identifying areas where more intervention is needed.

- Performance integration: requires integrating performance tracking with learning, which provides the best correlation between performance and learning but can be expensive to develop in-house
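To see why a team-wide KPI can “average out” the picture, consider the small sketch below. The rep names, sales figures and target are invented for illustration only.

```python
from statistics import mean

# Hypothetical data: new-product units sold per rep in the month after training
sales = {"rep_a": 12, "rep_b": 11, "rep_c": 0, "rep_d": 13, "rep_e": 1}
target = 5  # assumed per-rep target

# The general KPI: one number for the whole team
team_average = mean(sales.values())

# The per-employee view that the team number hides
below_target = sorted(rep for rep, units in sales.items() if units < target)

print(f"Team average: {team_average}, target: {target}")
print(f"Reps below target: {below_target}")
```

The team average sits comfortably above the target here, even though two of the five reps are barely selling the new product. Only the per-employee view shows where more intervention is needed.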

The future: performance integration for all learning

Platforms that tie learning to performance, such as performance gamification platforms, solve this problem.

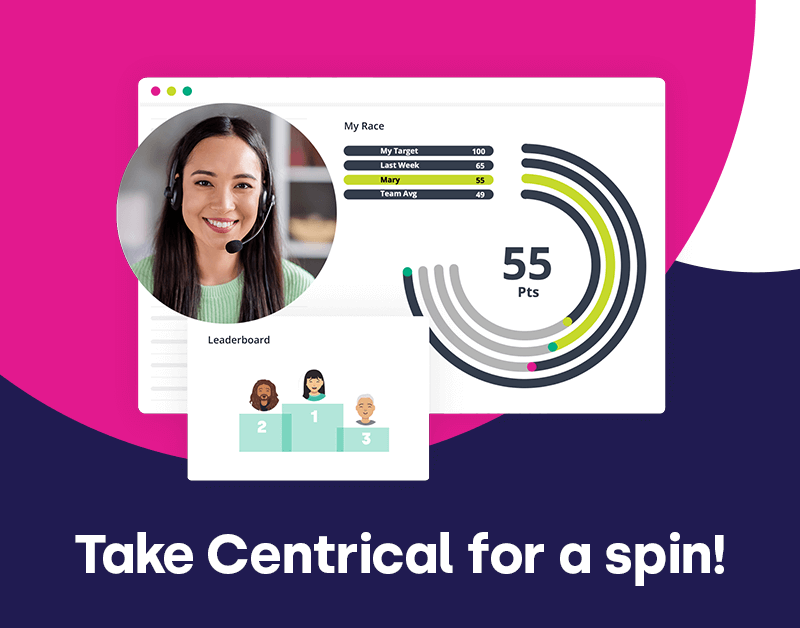

These platforms measure employee KPIs (capturing behavior through existing enterprise applications, such as a CRM) as well as learning. The result immediately covers the “performance” levels, since real-time performance data, such as new product sales or proposals, can be tied to actual training, as well as to the timing and repetition of training. This brings in a new era in which all four levels of the Kirkpatrick model are covered and learning professionals can show, by clicking a report, the true business impact of learning.
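A minimal sketch of what tying a KPI to training could look like is shown below: for each employee, it compares the average weekly KPI after training with the average before it. The data, the names and the simple before/after comparison are assumptions for illustration. A real platform would likely use richer data, and a before/after difference on its own does not prove that training caused the change.

```python
from statistics import mean

# Hypothetical data: weekly new-product sales per employee. The names and
# numbers are invented for illustration.
weekly_sales = {
    "ana": [1, 2, 3, 4, 5, 6],
    "ben": [2, 2, 2, 2, 3, 1],
}
# Assumed: index of the first week after each employee finished the training.
first_week_after_training = {"ana": 3, "ben": 3}

def uplift(series, split):
    """Average weekly KPI after training minus the average before it."""
    return mean(series[split:]) - mean(series[:split])

report = {
    name: uplift(series, first_week_after_training[name])
    for name, series in weekly_sales.items()
}
print(report)
```

In this made-up example one employee’s sales rise after training while the other’s stay flat, which is the kind of per-person signal that can guide corrective learning.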

Why it really matters

Tying performance to learning is even more thrilling when actual performance metrics can be used as a prompt for learning.

As you can see in the picture above (a shot of Gameffective’s user interface), things get interesting when performance and learning are combined. In this case, the employee can see their performance metrics, and the color coding shows their achievement for the given time period.

The system tracks this performance and prompts a learning activity (in the booster, marked by the small lightning sign) based on that specific KPI, taking into account past learning, actual performance and more. In this way, the most relevant learning is served to the employee on an almost daily basis, requiring only 5-10 minutes of learning engagement.
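The general idea of a KPI-driven learning prompt can be sketched as follows. This is not Gameffective’s actual logic: the function name, the KPI names, the activity catalog and the "largest relative gap first" rule are all assumptions chosen to illustrate the concept.

```python
def pick_learning_prompt(kpis, targets, catalog, recently_completed):
    """Suggest an activity for the KPI that is furthest below its target.

    Returns (kpi_name, activity) or None when nothing needs prompting.
    """
    # Relative shortfall for every KPI that is under target
    gaps = {
        name: (targets[name] - value) / targets[name]
        for name, value in kpis.items()
        if value < targets[name]
    }
    # Walk the KPIs from the largest gap down and skip recently completed learning
    for kpi in sorted(gaps, key=gaps.get, reverse=True):
        for activity in catalog.get(kpi, []):
            if activity not in recently_completed:
                return kpi, activity
    return None

# Hypothetical employee data
kpis = {"new_product_sales": 2, "proposals_sent": 9, "calls_logged": 40}
targets = {"new_product_sales": 10, "proposals_sent": 10, "calls_logged": 35}
catalog = {
    "new_product_sales": ["product-basics", "handling-objections"],
    "proposals_sent": ["proposal-templates"],
}
recently_completed = {"product-basics"}

prompt = pick_learning_prompt(kpis, targets, catalog, recently_completed)
print(prompt)
```

Here the weakest KPI is new product sales, and because the employee has already completed "product-basics", the next activity for that KPI is suggested instead.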

When learning and performance are both tracked, managers can determine how competent, knowledgeable and proficient users are, and platforms such as Gameffective’s can prompt learning automatically based on that combined picture. This is the future of eLearning.
